Just a week ago, I was on a call with a DeepEval user who told me she considers testing and evaluating large language models (LLMs) as distinct concepts. When asked what was her definition of LLM testing, this was what she said:

Evaluating LLMs to us is more about choosing the right LLMs through benchmarks, whereas LLM testing is more about exploring the unexpected things that can go wrong in different scenarios.

Since I’ve already written quite an article on everything you need to know about LLM evaluation metrics, for this article we’ll dive into how to use these metrics for LLM testing instead. We’ll explore what LLM testing is, different test approaches and edge cases to look out for, highlight best practices for LLM testing, as well as how to carry out LLM testing through DeepEval, the open-source LLM testing framework.

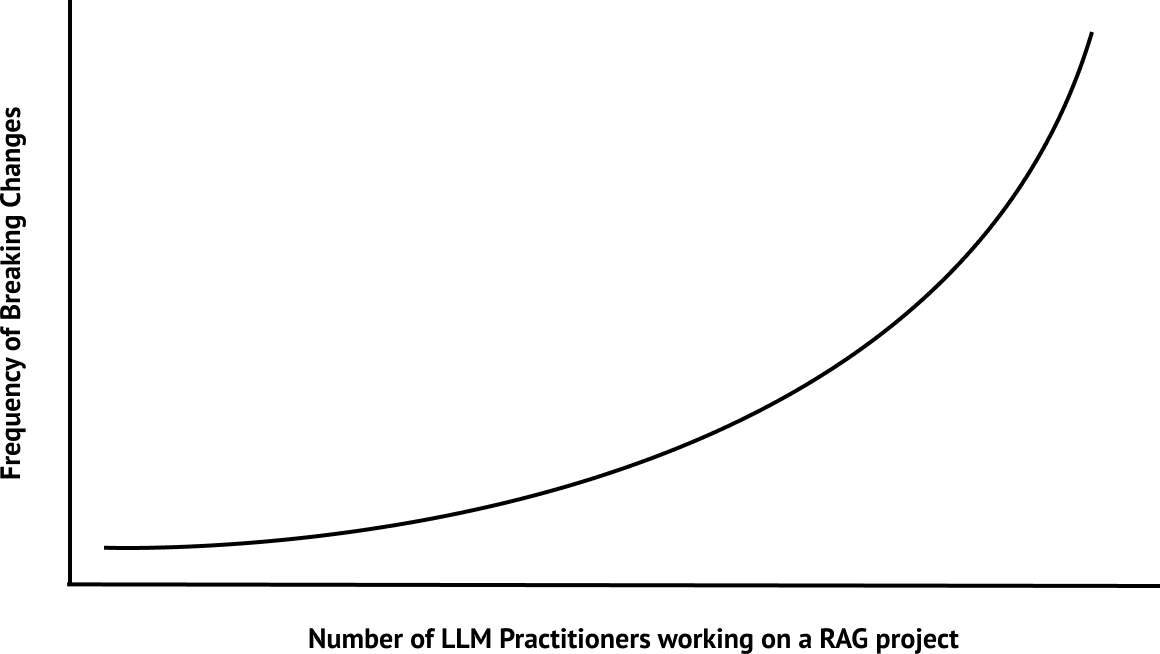

And before I forget, here's my go to "graph" to explain the importance of testing, especially for unit testing AI agents.

Convinced? Let's dive right in.

What is LLM Testing?

LLM testing is the process of evaluating an LLM output to ensure it meets all the specific assessment criteria (such as accuracy, coherence, fairness and safety, etc.) based on its intended application purpose. It is vital a robust testing approach can be used to evaluate and regression test LLM systems at scale.

Evaluating LLMs is a complicated process because, unlike traditional software development where outcomes are predictable and errors can be debugged as logic can be attributed to specific code blocks, LLMs are a black-box with infinite possible inputs and corresponding outputs.

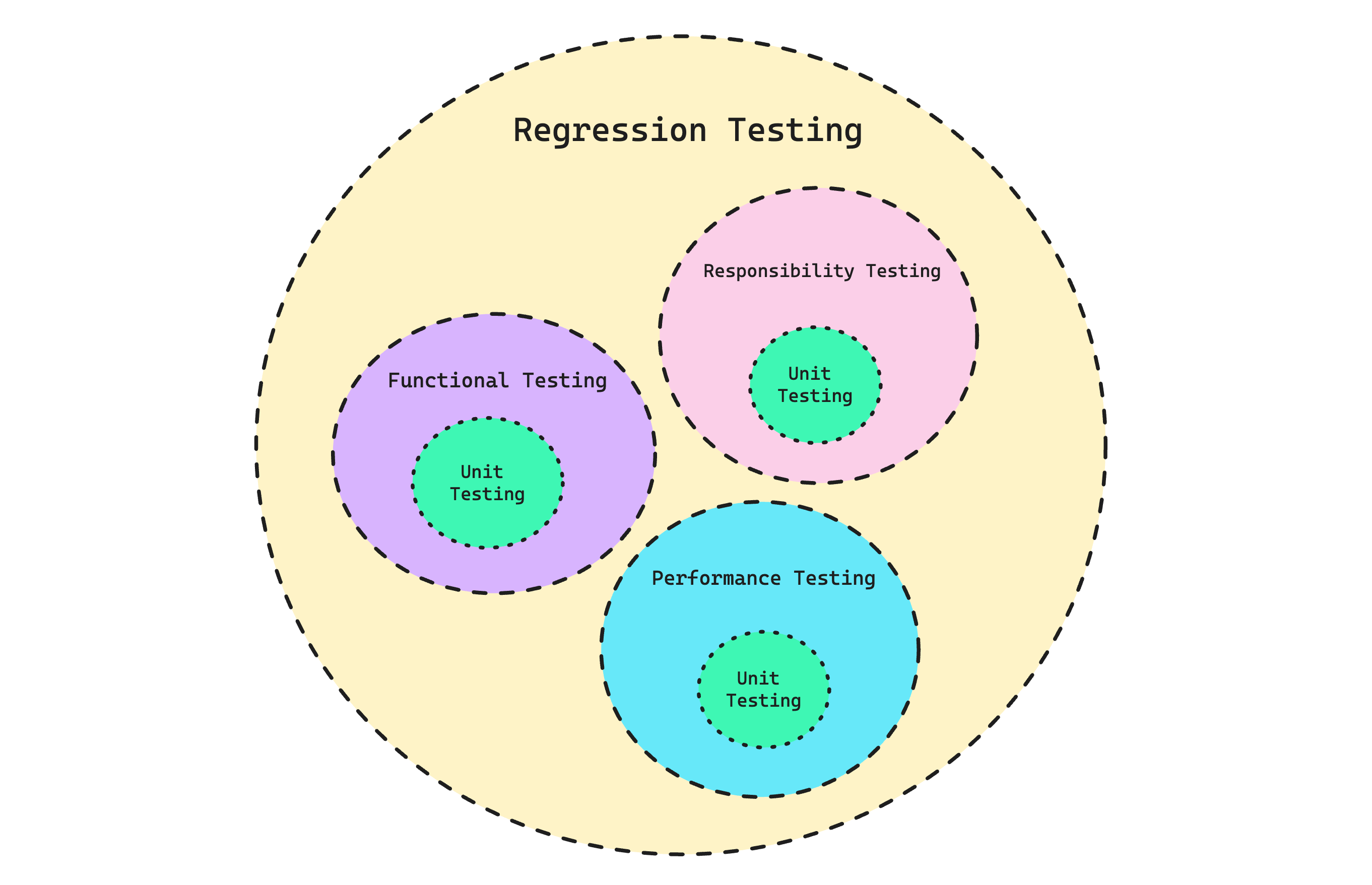

However, that’s not to say concepts from traditional software testing don’t carry over to testing LLMs— they are merely different. Unit tests make up functional, performance, and responsibility tests, of which they together make up a regression test for your LLM.

Unit Testing

Unit testing involves testing the smallest testable parts of an application, which for LLMs means evaluating an LLM response for a given input, based on some clearly defined criteria.

For example, for a unit test where you’re trying to assess the quality of an LLM generated summary, the criteria could be whether the summary contains enough information, and whether it contains any hallucinations from the original text. The scoring of a criteria, is done by something known as an LLM evaluation metric (more on this later).

You can choose to implement your own LLM testing framework, but in this article we’ll be using DeepEval to create and evaluate unit test cases:

pip install deepevalThen, create an LLM test case:

from deepeval.test_case import LLMTestCase

original_text="""In the rapidly evolving digital landscape, the

proliferation of artificial intelligence (AI) technologies has

been a game-changer in various industries, ranging from

healthcare to finance. The integration of AI in these sectors has

not only streamlined operations but also opened up new avenues for

innovation and growth."""

summary="""Artificial Intelligence (AI) is significantly influencing

numerous industries, notably healthcare and finance."""

test_case = LLMTestCase(

input=original_text,

actual_output=summary

)Here, input is the input to your LLM, while the actual_output is the output of your LLM.

Lastly, evaluate this test case using DeepEval's summarization metric:

export OPENAI_API_KEY="..."from deepeval.metrics import SummarizationMetric

...

metric = SummarizationMetric(threshold=0.5)

metric.measure(test_case)

print(metric.score)

print(metric.reason)

print(metric.is_successful())Functional Testing

Functional testing LLMs involves evaluating LLMs on a specific task. As opposed to traditional software functional testing (which, for example, would involve verifying whether a user is able to login by testing the entire login flow), functional testing for LLMs assesses the model’s proficiency across a range of inputs within a particular task (eg. text summarization). In other words, functional tests are made up of multiple unit tests for a specific use case.

To group unit tests together to perform functional testing, first create a test file:

touch test_summarization.pyThe example task we’re using here is text summarization. Then, define the set of unit test cases:

from deepeval.test_case import LLMTestCase

# Hypothetical test data from your test dataset,

# containing the original text and summary to

# evaluate a summarization task

test_data = [

{

"original_text": "...",

"summary": "..."

},

{

"original_text": "...",

"summary": "..."

}

]

test_cases = []

for data in test_data:

test_case = LLMTestCase(

input=data.get("original_text", None),

actual_output=data.get("input", None)

)

test_cases.append(test_case)Lastly, loop through the unit test cases in bulk, using DeepEval’s Pytest integration, and execute the test file:

import pytest

from deepeval.metrics import SummarizationMetric

from deepeval import assert_test

...

@pytest.mark.parametrize(

"test_case",

test_cases,

)

def test_summarization(test_case: LLMTestCase):

metric = SummarizationMetric()

assert_test(test_case, [metric])deepeval test run test_summarization.pyNote that the robustness of your functional test, is entirely dependent on your unit test coverage. Therefore, you should aim to cover as many edge cases as possible when constructing your unit tests for a particular functional test. Also if you're using Confident AI with DeepEval, you get an entire testing suite out-of-the-box for free (docs here):

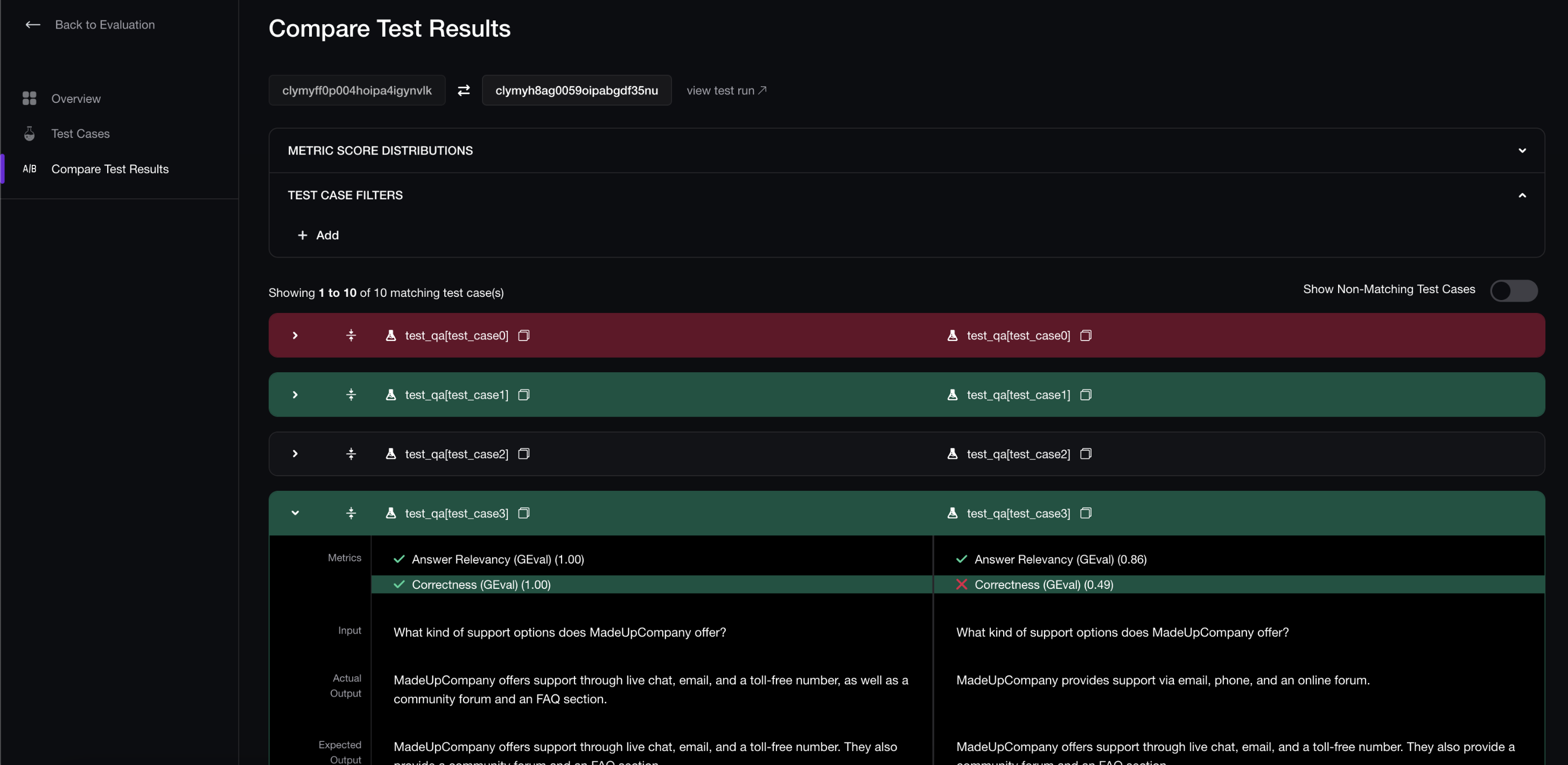

Confident AI: The DeepEval LLM Evaluation Platform

The leading platform to evaluate and test LLM applications on the cloud, native to DeepEval.