Constructing a large-scale, comprehensive dataset to test LLM outputs can be a laborious, costly, and challenging process, especially if done from scratch. But what if I told you that it’s now possible to generate the same thousands of high-quality test cases you spent weeks painstakingly crafting, in just a few minutes?

Synthetic data generation leverages LLMs to create high-quality data without the need to manually collect, clean, and annotate massive datasets. With models like GPT-4, it's now possible to synthetically produce datasets that are more comprehensive and diverse than human-labeled ones, in far less time. These datasets can then be used to benchmark LLMs (and LLM systems) with the help of LLM evaluation metrics.

In this article, I’ll teach you everything you need to know about using LLMs to generate synthetic datasets (which can, for example, be used to evaluate RAG pipelines). We’ll explore:

Synthetic generation methods (Distillation and Self-Improvement)

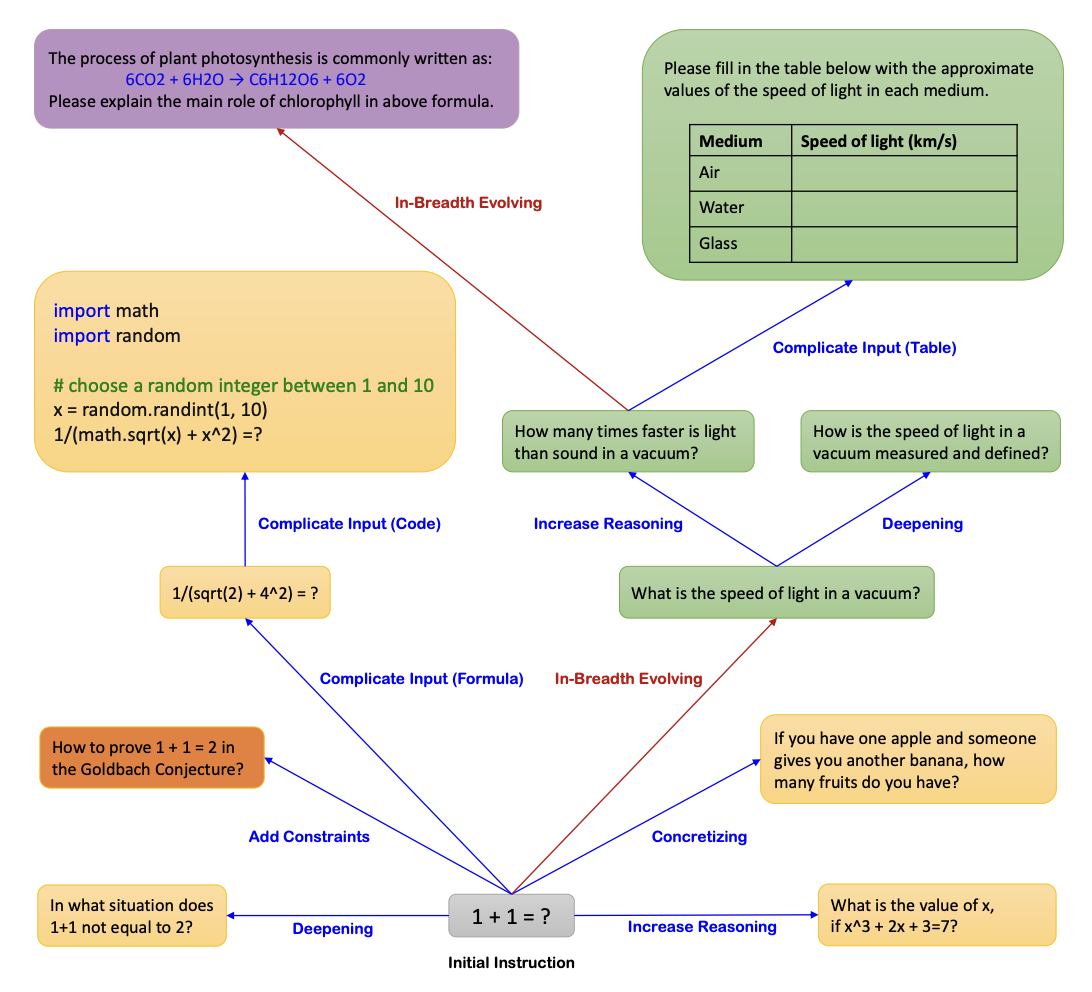

What data evolution is, various evolution techniques, and its role in synthetic data generation

A step-by-step tutorial on creating high-quality synthetic data from scratch using LLMs.

How to use DeepEval to generate synthetic datasets in under 5 lines of code.

Intrigued? Let’s dive in.

What is Synthetic Data Generation Using LLMs?

Synthetic data generation using LLMs means using an LLM to create artificial data, typically datasets that can be used to train, fine-tune, and even evaluate LLMs themselves. Generating synthetic datasets is faster than scouring public datasets and cheaper than human annotation. It can also yield higher quality and greater data diversity, and that diversity is also imperative for LLM red teaming.

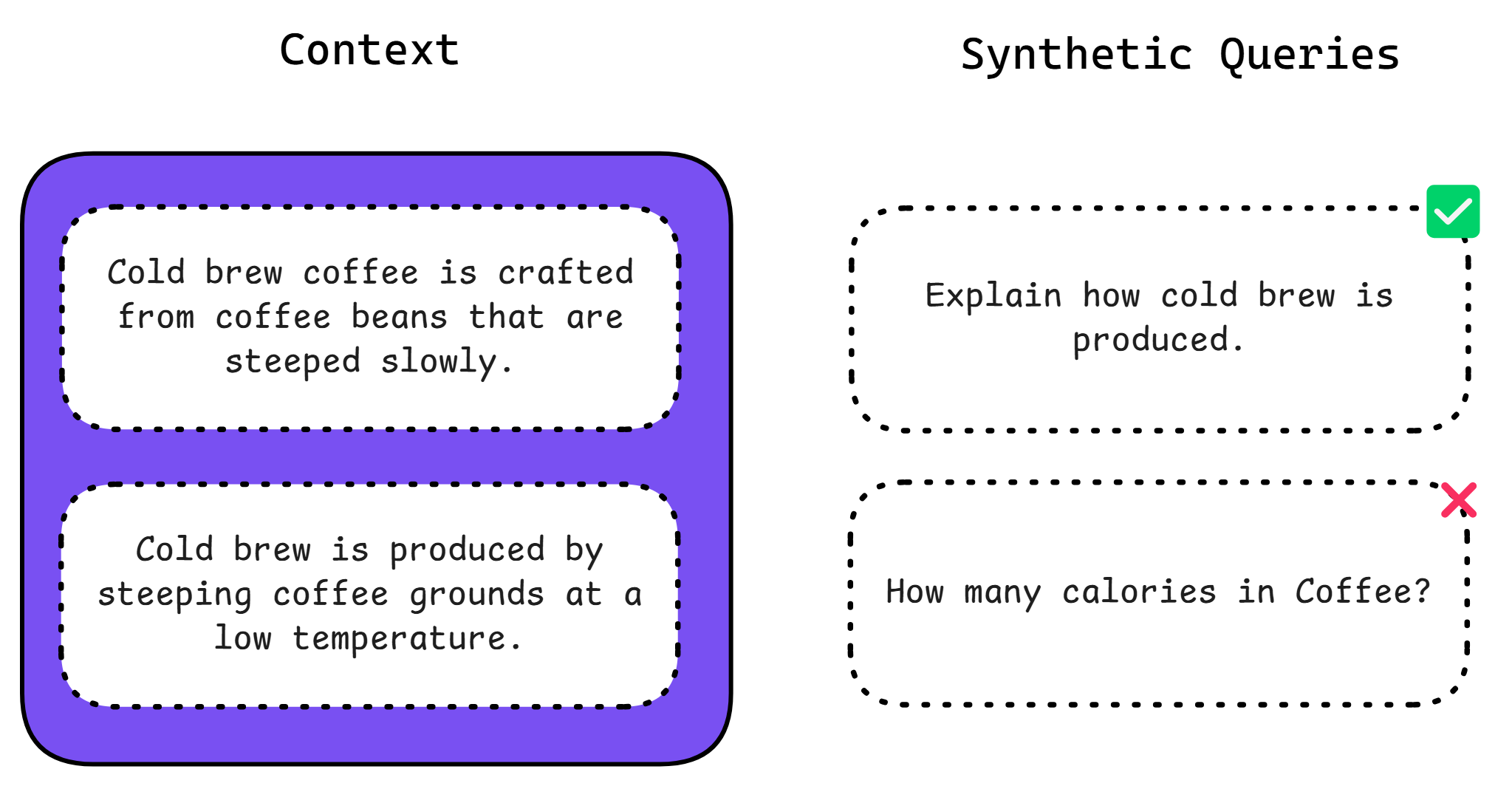

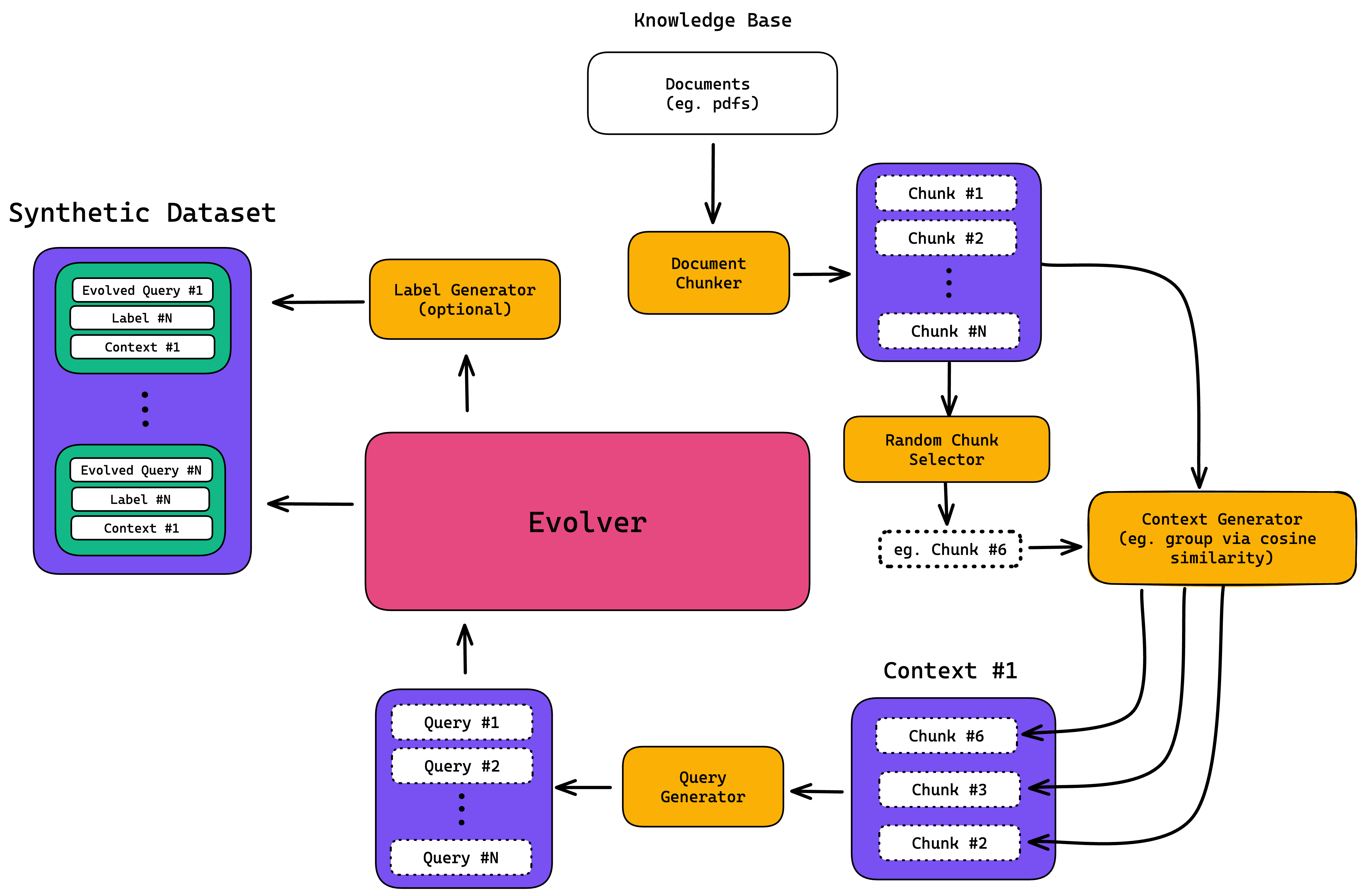

The process starts with the creation of synthetic queries, which are generated using context from your knowledge base (often in the form of documents) as the ground truth. The generated queries are then "evolved" multiple times to make them more complex and realistic. Combined with the original context they were generated from, these queries make up your final synthetic dataset. Although optional, you can also choose to generate a target label for each synthetic query-context pair, which acts as the expected output of your LLM system for that query.
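The flow described above (generate a query from context, evolve it, optionally add a target label) can be sketched in plain Python. This is a minimal illustration under stated assumptions, not DeepEval's implementation: `llm` is a hypothetical callable (prompt in, text out) that you would back with the model of your choice, and the prompt wording is placeholder text.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

# Hypothetical LLM interface: any function that takes a prompt and returns text.
LLM = Callable[[str], str]


@dataclass
class SyntheticExample:
    input: str                             # the evolved synthetic query
    context: List[str]                     # ground-truth context it was generated from
    expected_output: Optional[str] = None  # optional target label


def generate_example(llm: LLM, context: List[str], num_evolutions: int = 2,
                     with_expected_output: bool = True) -> SyntheticExample:
    joined = "\n".join(context)
    # 1. Generate a query that the context can answer (the context acts as ground truth).
    query = llm(f"Write a question that can be answered from this context:\n{joined}")
    # 2. "Evolve" the query several times to make it more complex and realistic.
    for _ in range(num_evolutions):
        query = llm(
            "Rewrite this question to be more complex and realistic, while keeping it "
            f"answerable from the context.\nQuestion: {query}\nContext:\n{joined}"
        )
    # 3. Optionally generate a target label (expected output) for the query-context pair.
    expected = None
    if with_expected_output:
        expected = llm(f"Answer using only this context.\nContext:\n{joined}\nQuestion: {query}")
    return SyntheticExample(input=query, context=context, expected_output=expected)
```

Each evolution step feeds the previous query back in together with the context, so the final query stays grounded in the same context it started from.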

When it comes to generating a synthetic dataset for evaluation, there are two main methods: self-improvement, which uses your own model’s outputs, or distillation from a more advanced model.

Self-improvement: involves your model generating data iteratively from its own output without external dependencies

Distillation: involves using a stronger model to generate synthetic data to evaluate a weaker model

Self-improvement methods, such as Self-Instruct or SPIN, are limited by the model’s own capabilities and may suffer from amplified biases and errors. In contrast, distillation techniques are limited only by the best model available, which generally yields the highest-quality generations.
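To make the distinction concrete, here's a minimal sketch of the two strategies. `teacher` and `model` are hypothetical prompt-in, text-out callables, and the self-improvement loop only loosely follows the Self-Instruct idea of bootstrapping from a model's own outputs; it is not an implementation of Self-Instruct or SPIN.

```python
from typing import Callable, List

# Hypothetical LLM interface: prompt in, text out.
LLM = Callable[[str], str]


def distill(teacher: LLM, contexts: List[str]) -> List[str]:
    """Distillation: a stronger 'teacher' model writes the queries that will
    later be used to evaluate a weaker model."""
    return [teacher(f"Write a test question about:\n{c}") for c in contexts]


def self_improve(model: LLM, seed_queries: List[str], rounds: int = 2) -> List[str]:
    """Self-improvement: the model repeatedly generates new queries from its own
    earlier outputs, with no external model involved."""
    pool = list(seed_queries)
    for _ in range(rounds):
        # Each round conditions on everything generated so far, which is also
        # how the model's own biases and errors can compound.
        pool += [model(f"Write a new question similar to:\n{q}") for q in pool]
    return pool
```

In `self_improve`, every new query is derived from something the same model wrote earlier, so the pool can never exceed that model's own capabilities. That is the limitation described above.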

Generating Data from your Knowledge Base

The first step in synthetic data generation is creating synthetic queries from your list of contexts, which are sourced directly from your knowledge base. Essentially, contexts serve as the ideal retrieval contexts for your LLM application, much as expected outputs serve as ground truth references for your LLM’s actual outputs. For those who want something working immediately, here is how you can generate high-quality synthetic data using DeepEval, the open-source LLM evaluation framework (⭐github here: https://github.com/confident-ai/deepeval):

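```python
from deepeval.synthesizer import Synthesizer

# Create a synthesizer
synthesizer = Synthesizer()

# Chunk the documents, build contexts, and generate synthetic goldens from them
synthesizer.generate_goldens_from_docs(
    document_paths=['example.txt', 'example.docx', 'example.pdf'],
)
```

Constructing Contexts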

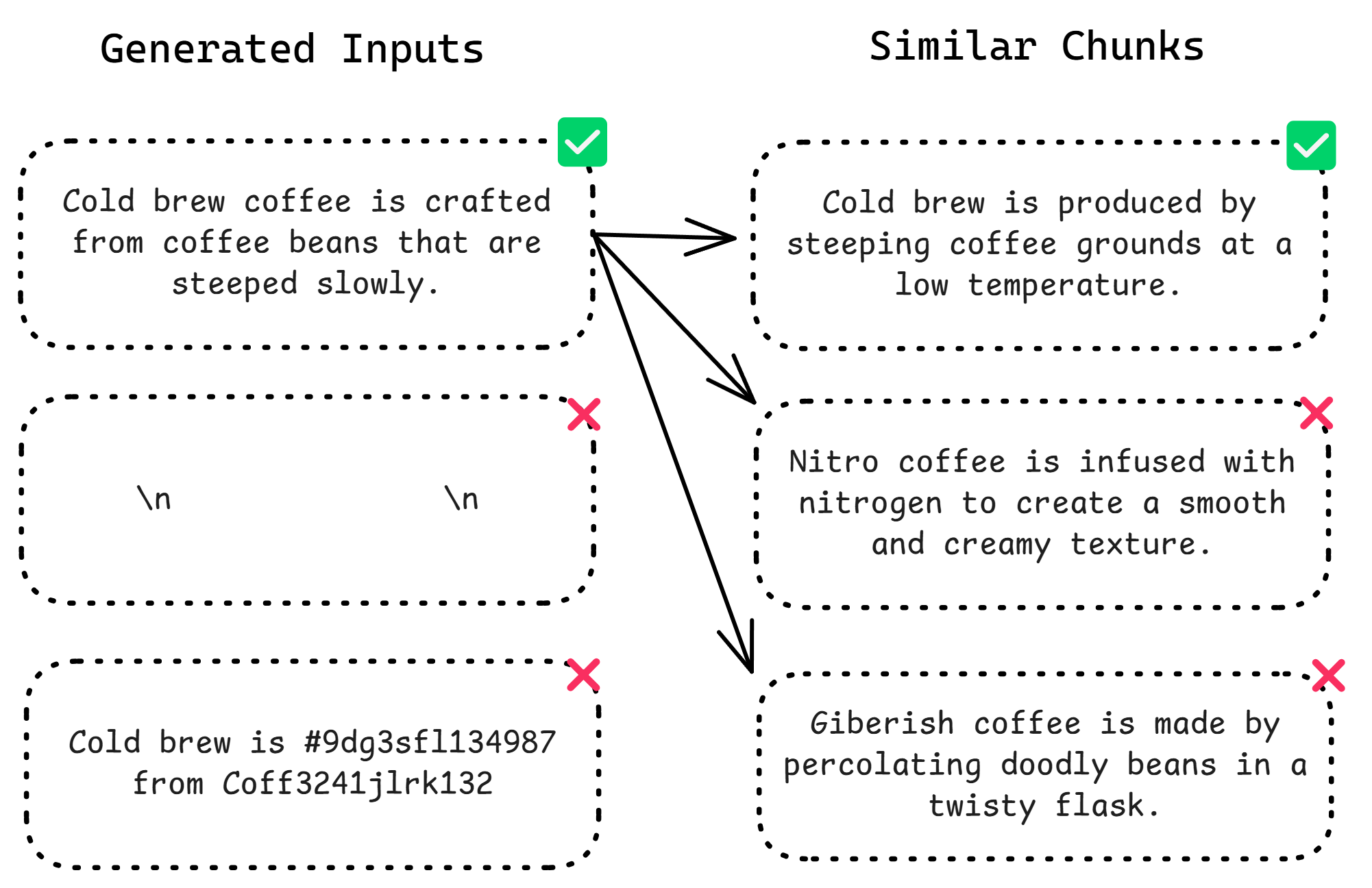

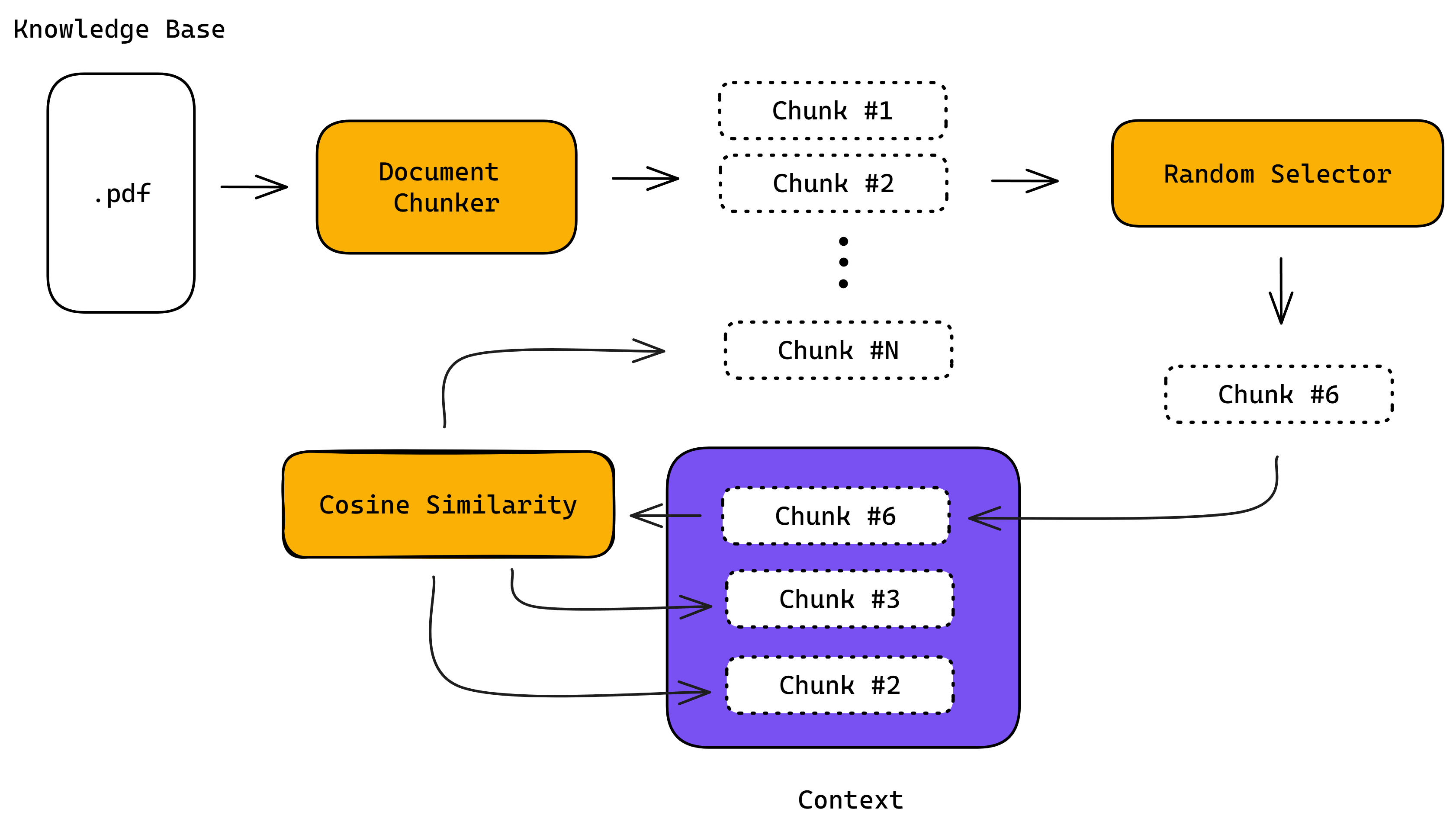

During context generation, a document — or multiple documents — from your knowledge base is divided into chunks using a token splitter. A random chunk is then selected, and additional chunks are retrieved and grouped with the selected one based on their similarity.
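The splitting and random-selection steps can be sketched as follows. This is a simplified illustration, not DeepEval's splitter: whitespace-separated words stand in for model tokens, and `split_into_chunks`, `pick_seed_chunk`, `chunk_size`, and `chunk_overlap` are hypothetical names chosen for this example.

```python
import random
from typing import List


def split_into_chunks(text: str, chunk_size: int = 8, chunk_overlap: int = 2) -> List[str]:
    """Naive token splitter: whitespace-separated words stand in for model tokens."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    tokens = text.split()
    step = chunk_size - chunk_overlap  # how far each window advances
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(" ".join(tokens[start:start + chunk_size]))
        if start + chunk_size >= len(tokens):
            break  # this window already reaches the end of the document
    return chunks


def pick_seed_chunk(chunks: List[str], rng: random.Random) -> int:
    """Randomly choose the index of the chunk a context will be built around."""
    return rng.randrange(len(chunks))
```

To cover several documents, you could chunk each one and pool the results (for example `[c for doc in docs for c in split_into_chunks(doc)]`) before picking a seed chunk. Passing an explicit `random.Random` instance keeps the selection reproducible.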

Similarity can be calculated using several methods:

Utilizing similarity or distance algorithms, such as cosine similarity

Employing knowledge graphs

Leveraging LLMs themselves, which, though less realistic, offer the most accuracy

Applying clustering techniques to identify patterns

Using machine learning models to predict groupings based on features.

In any case, the overarching goal remains the same: to aggregate similar chunks of information effectively.
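Of the options above, cosine similarity is the simplest to illustrate. The sketch below uses bag-of-words counts as a stand-in for real embeddings, which keeps the example dependency-free, and groups a seed chunk with its most similar neighbours. `group_context`, `k`, and `min_sim` are illustrative names, not DeepEval parameters.

```python
import math
from collections import Counter
from typing import List


def cosine_similarity(a: str, b: str) -> float:
    """Cosine similarity over bag-of-words counts (a stand-in for embeddings)."""
    va, vb = Counter(a.lower().split()), Counter(b.lower().split())
    dot = sum(va[t] * vb[t] for t in va)
    norm = math.sqrt(sum(v * v for v in va.values())) * math.sqrt(sum(v * v for v in vb.values()))
    return dot / norm if norm else 0.0


def group_context(chunks: List[str], seed: int, k: int = 2, min_sim: float = 0.1) -> List[str]:
    """Group the seed chunk with up to k of its most similar chunks scoring at least min_sim."""
    scored = [(cosine_similarity(chunks[seed], c), i) for i, c in enumerate(chunks) if i != seed]
    scored.sort(reverse=True)  # most similar first
    neighbours = [chunks[i] for sim, i in scored[:k] if sim >= min_sim]
    return [chunks[seed]] + neighbours
```

Swapping `cosine_similarity` for a function that compares embedding vectors from your retriever's embedding model would bring this closer to how most RAG retrievers measure similarity.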

To ensure that these groupings are effective and align with the specific needs of your LLM application, it’s best practice to mirror your application’s retriever logic in the context generation process. This includes carefully considering aspects such as the token-splitting method, chunk size, and chunk overlap.

Such alignment helps ensure that the synthetic data behaves consistently with your application’s expectations, preventing skew in outcomes caused by differences in retriever complexity.
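One lightweight way to enforce this alignment is to define the chunking settings once and check that the context generator uses the same values as the retriever. This is a sketch under assumptions: `ChunkingConfig` and `mismatched_settings` are hypothetical names, and the default values are placeholders rather than recommendations.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass(frozen=True)
class ChunkingConfig:
    splitter: str = "token"  # e.g. token-based vs sentence-based splitting
    chunk_size: int = 512    # placeholder: tokens per chunk
    chunk_overlap: int = 50  # placeholder: tokens shared by neighbouring chunks


def mismatched_settings(retriever: ChunkingConfig, generator: ChunkingConfig) -> List[str]:
    """Return the names of settings where context generation diverges from the retriever."""
    return [f.name for f in fields(ChunkingConfig)
            if getattr(retriever, f.name) != getattr(generator, f.name)]
```

Running a check like this before generating a dataset (and failing if the list is non-empty) catches cases where the retriever has been re-tuned but the synthetic contexts were still built with old settings.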

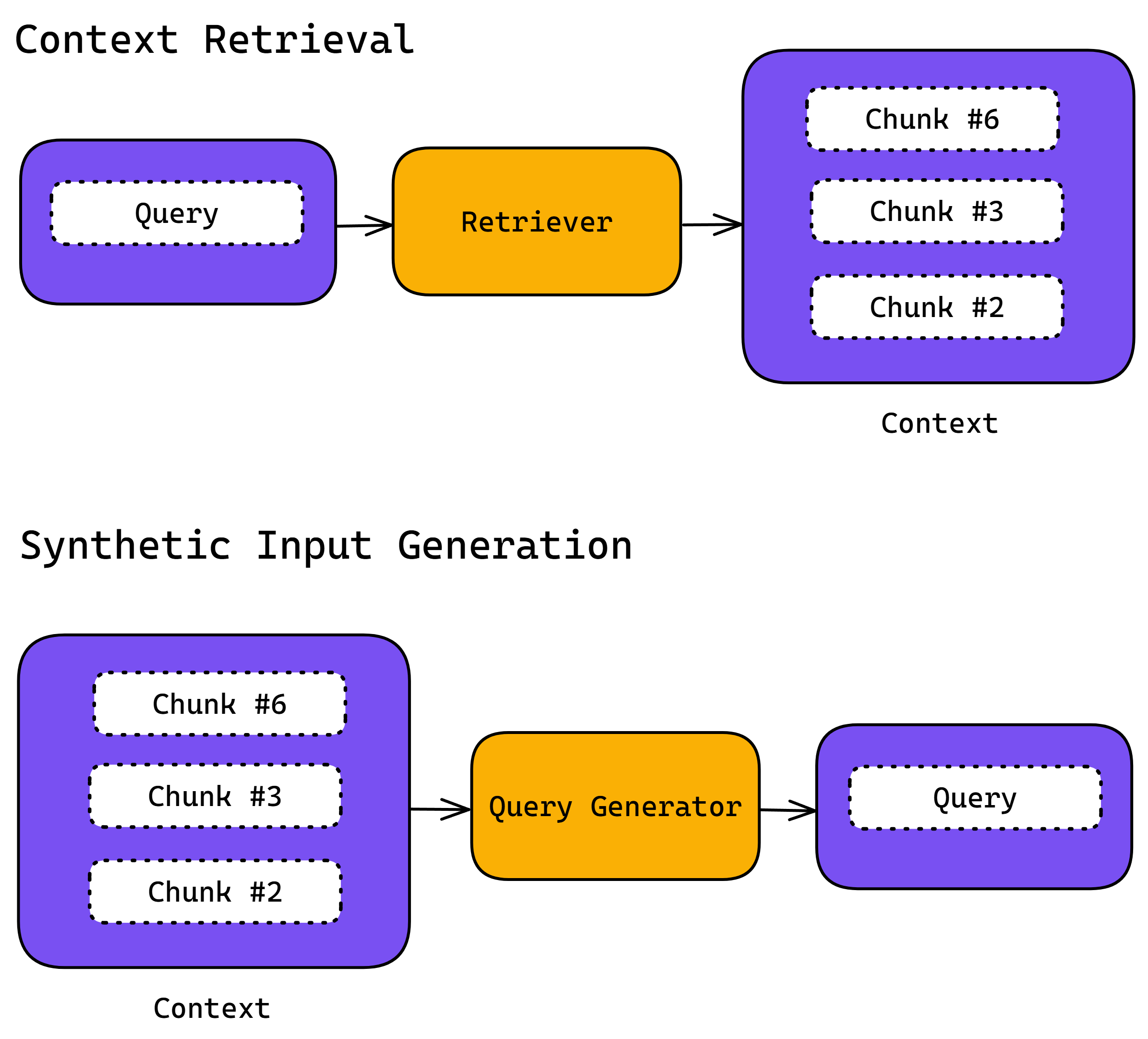

Generating Synthetic Inputs from Contexts

Once your contexts have been created, synthetic inputs are generated from them. This reverses the standard retrieval operation: instead of locating contexts based on inputs, inputs are created based on predefined contexts. This ensures that every synthetic input directly corresponds to a context, enhancing relevance and accuracy.
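Here is a minimal sketch of that reversal. It assumes a hypothetical prompt-in, text-out `llm` callable and a model that follows the instruction to return a JSON list. The prompt wording and `inputs_from_context` are illustrative and do not reflect DeepEval's internals; in practice you would also validate the JSON and handle malformed responses.

```python
import json
from typing import Callable, List, Tuple

# Hypothetical LLM interface: prompt in, text out.
LLM = Callable[[str], str]

INPUT_PROMPT = (
    "Given the context below, write {n} questions a user might ask that can be "
    "answered using ONLY this context. Return a JSON list of strings.\n\n"
    "Context:\n{context}"
)


def inputs_from_context(llm: LLM, context: List[str], n: int = 3) -> List[Tuple[str, List[str]]]:
    """Reverse retrieval: start from a fixed context and ask for inputs it can answer.
    Each input is returned paired with the context it was generated from."""
    raw = llm(INPUT_PROMPT.format(n=n, context="\n".join(context)))
    questions = json.loads(raw)
    return [(q, context) for q in questions[:n]]
```

Because each input is returned together with its source context, the context doubles as the ideal retrieval context when you later evaluate your retriever on that input.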

Additionally, these contexts can optionally be used to produce expected outputs for the synthetically generated inputs.

This asymmetric approach ensures that all components (inputs, outputs, and contexts) stay closely aligned.
