Your LLM app is streaming out tokens faster than you can count. Thousands of words, millions of letters. Every. Single. Day. But here’s the billion-dollar question: are they any good?

Now imagine manually reviewing every single one. Staying up at 2am reading them line by line, checking for accuracy, spotting bias, and making sure they’re even relevant — it’s exhausting just thinking about it.

That’s why LLM-as-a-Judge exists — to replace that manual slog with automated scoring against custom evaluation criteria you define. Here are just a few examples:

Answer Relevancy – Did the response actually address the user’s request?

Helpfulness – Was it genuinely useful, or just pretty sentences with no substance?

Faithfulness – Did it stick to the facts instead of wandering into hallucinations?

Bias – Was it balanced, or did subtle skew creep in?

Correctness – Was it accurate from start to finish?

But LLM judges do have their limitations, and using them without caution will cause you nothing but frustration.

In this article, I’ll let you in on everything there is to know about using LLM judges for LLM (system) evaluations so that you no longer have to stay up late to QA LLM quality.

Feeling judgemental? Let's begin.

TL;DR

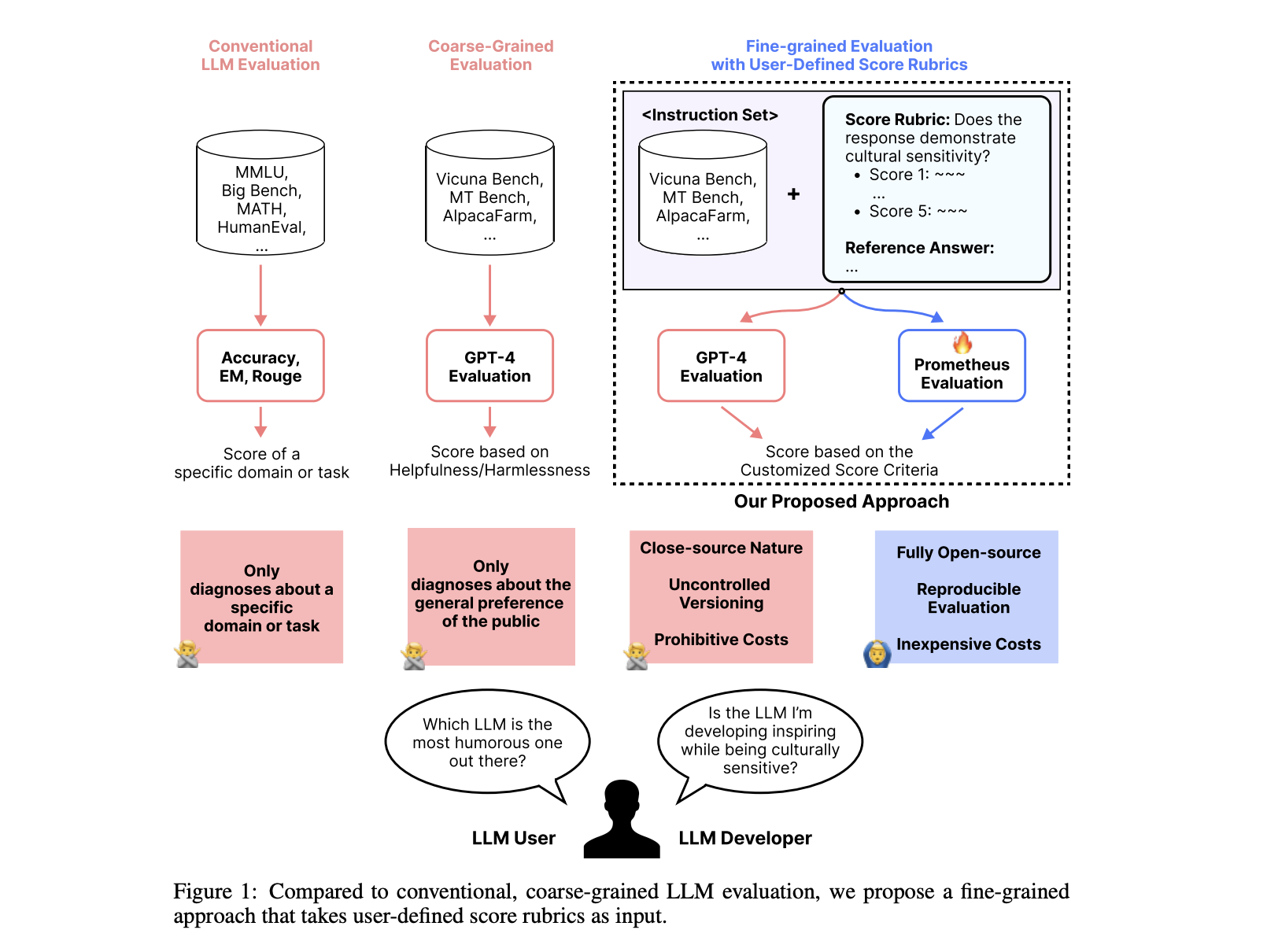

LLM-as-a-Judge is the most scalable, accurate, and reliable way to evaluate LLM apps when compared to human annotation and traditional scores like BLEU and ROUGE (backed by research)

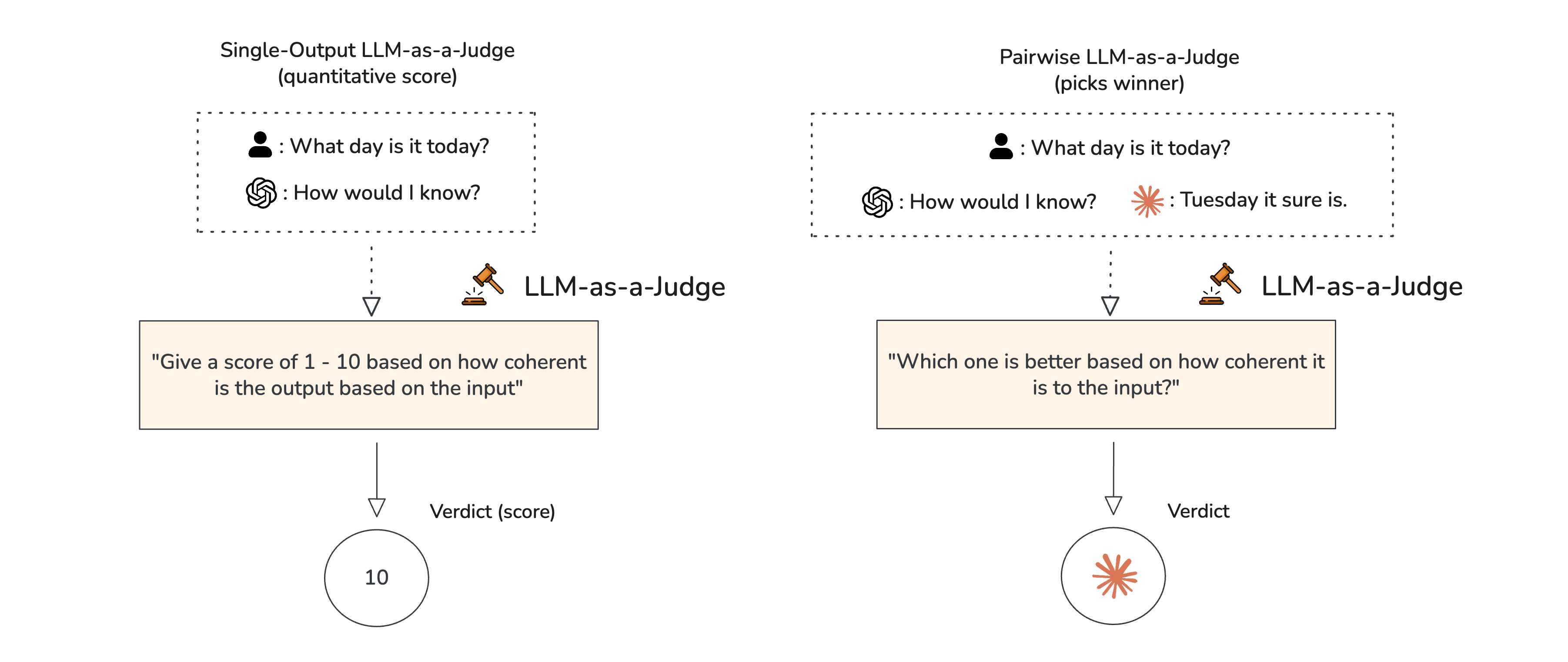

There are two types of LLM-as-a-Judge, "single output" and "pairwise".

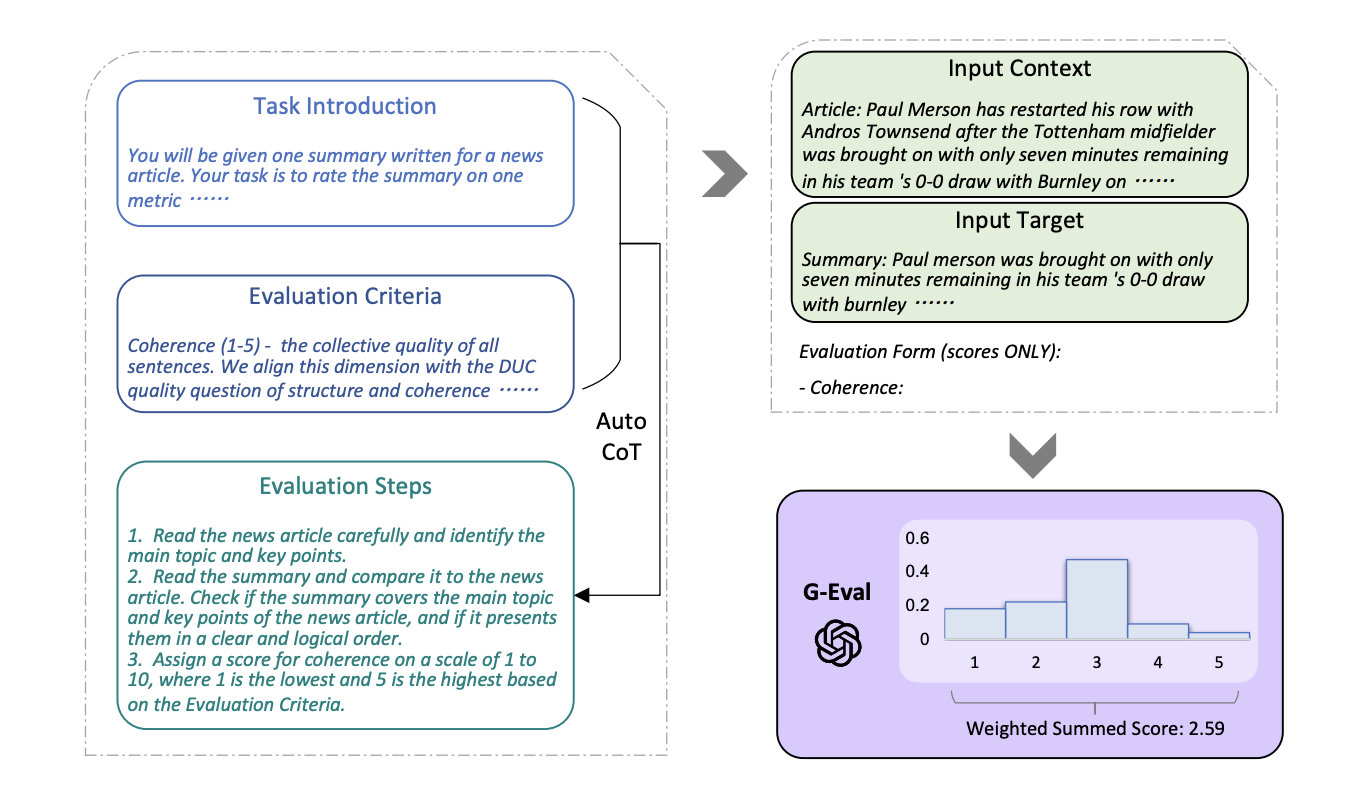

Single-output LLM-as-a-Judge scores individual LLM outputs against a custom criterion. These judges can be referenceless or reference-based (the latter requires a labelled output), and G-Eval is currently one of the most common ways to create custom judges.

Pairwise LLM-as-a-Judge, on the other hand, does not output a score. Instead, it chooses a "winner" from a list of different LLM outputs for a given input, much like an automated version of LLM arena.

Techniques to improve LLM judges include CoT prompting, in-context learning, swapping positions (for pairwise judges), and much more.

DeepEval (100% OS ⭐https://github.com/confident-ai/deepeval) allows anyone to implement both single output and pairwise LLM-as-a-Judge in under 5 lines of code, via G-Eval and Arena G-Eval respectively.

What exactly is “LLM as a Judge”?

LLM-as-a-Judge is the process of using LLMs to evaluate LLM (system) outputs, and it works by first defining an evaluation prompt based on any specific criteria of your choice, before asking an LLM judge to assign a score based on the input and outputs of your LLM system.

There are many types of LLM-as-a-judges for different use cases:

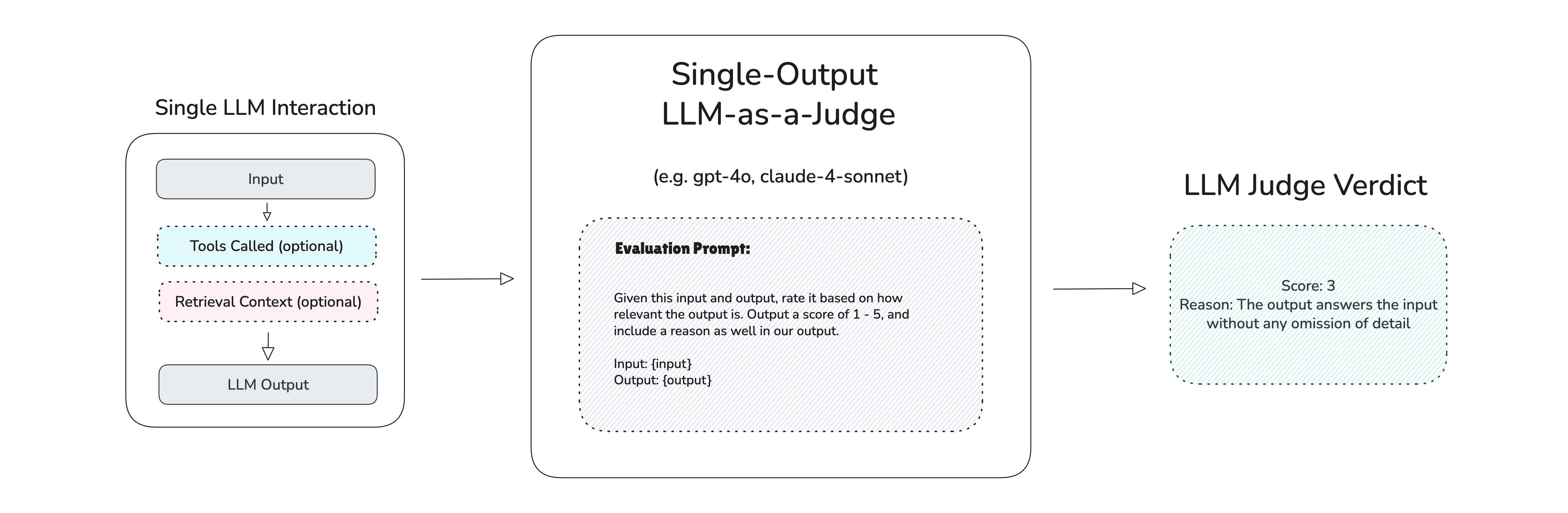

Single-output: Either reference-based or referenceless, takes in a single or multi-turn LLM interaction and outputs a quantitative verdict based on the evaluation prompt.

Pairwise: Similar to LLM arena, takes in a single or multi-turn LLM interaction and outputs which LLM app gave the better output.

We'll go through all of these in a later section, so don't worry about these too much right now.

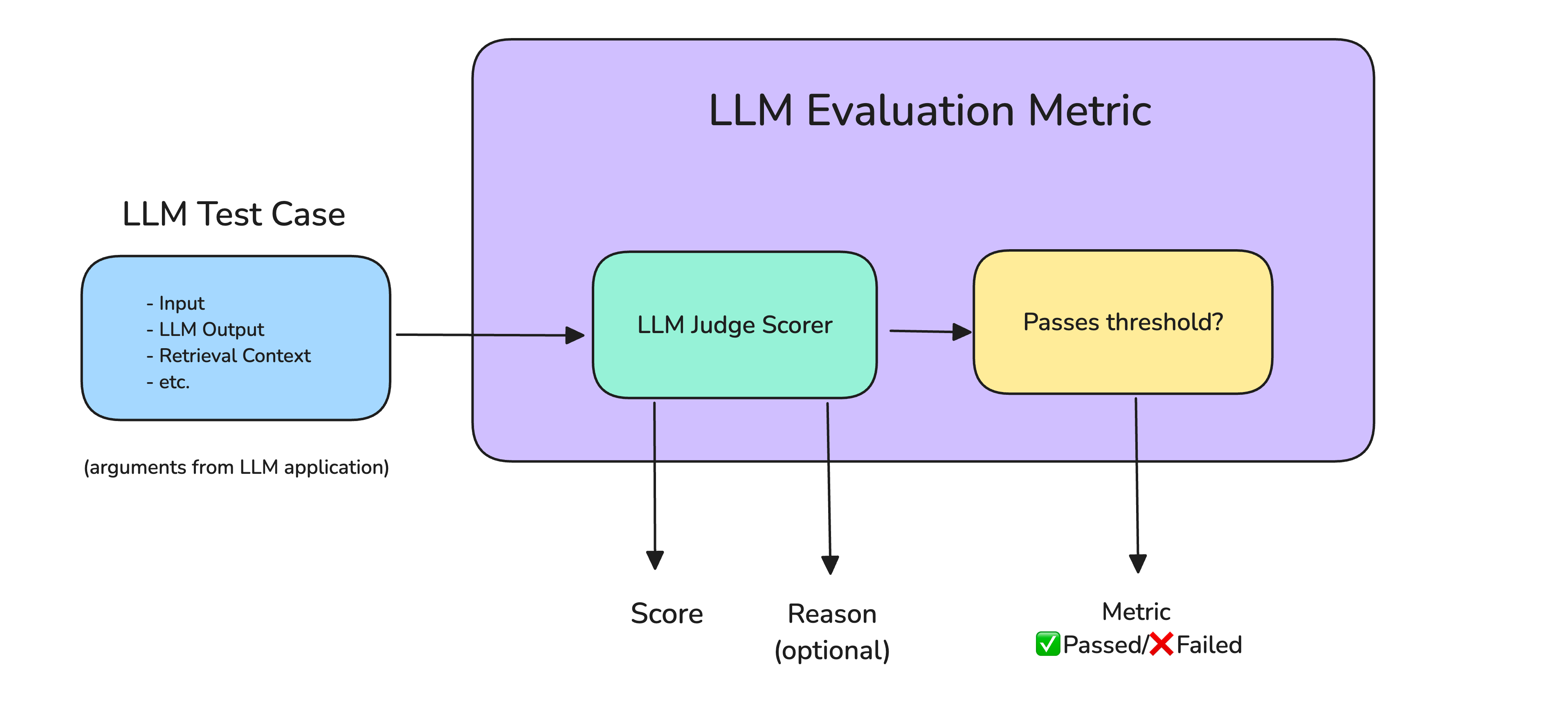

In the real world, LLM judges are often used as scorers for LLM-powered evaluation metrics such as G-Eval, answer relevancy, faithfulness, bias, and more. These metrics are in turn often used to evaluate different LLM systems such as AI agents. The concept is straightforward: provide an LLM with an evaluation criterion, and let it do the grading for you.

But how and where exactly would you use LLMs to judge LLM responses?

LLM-as-a-judge can be used as an automated grader/scorer for your chosen evaluation metric. To get started:

Choose the LLM you want to act as the judge.

Give it a clear, concise evaluation criterion or scoring rubric.

Provide the necessary inputs - usually the original prompt, the generated output, and any other context it needs.

The judge will then return a metric score (often between 0 and 1) based on your chosen parameters. For example, here’s a prompt you could give an LLM judge to evaluate summary coherence:

prompt = """

You will be given one summary (LLM output) written for a news article.

Your task is to rate the summary on how coherent it is to the original text (input).

Original Text:

{input}

Summary:

{llm_output}

Score:

"""By collecting these metric scores, you can build a comprehensive set of LLM evaluation results to benchmark, monitor, and regression-test your system. LLM-as-a-Judge is gaining popularity because the alternatives fall short — human evaluation is slow and costly, while traditional metrics like BERT or ROUGE miss deeper semantics in generated text.

It makes sense: How could we expect traditional, much smaller NLP models to effectively judge not just paragraphs of open-ended generated text, but also content in formats like Markdown or JSON?
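As a minimal sketch of how the coherence prompt above might be used, the snippet below fills in the placeholders and extracts a numeric score from the judge's reply. The `call_judge` argument is a hypothetical stand-in for whatever LLM client you use (it is not part of any library), and the 1 to 5 scale is an assumption added for illustration, since the original prompt does not state a range.

```python
import re
from typing import Callable

# Same wording as the prompt above, plus an assumed 1-5 scale instruction.
PROMPT_TEMPLATE = """You will be given one summary (LLM output) written for a news article.
Your task is to rate the summary on how coherent it is to the original text (input).
Reply with a single integer from 1 to 5.

Original Text:
{input}

Summary:
{llm_output}

Score:
"""


def build_prompt(input_text: str, llm_output: str) -> str:
    """Fill the template with the text under evaluation."""
    return PROMPT_TEMPLATE.format(input=input_text, llm_output=llm_output)


def parse_score(reply: str, low: int = 1, high: int = 5) -> int:
    """Extract the first integer in the judge's reply and validate its range."""
    match = re.search(r"\d+", reply)
    if match is None:
        raise ValueError(f"No score found in judge reply: {reply!r}")
    score = int(match.group())
    if not low <= score <= high:
        raise ValueError(f"Score {score} is outside the range {low}-{high}")
    return score


def judge_coherence(
    input_text: str, llm_output: str, call_judge: Callable[[str], str]
) -> int:
    """call_judge is hypothetical: any function that sends a prompt to an LLM."""
    return parse_score(call_judge(build_prompt(input_text, llm_output)))
```

Validating the reply matters in practice because judges occasionally return prose instead of a bare number, and failing loudly is usually safer than silently recording a bad score.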

LLM-as-a-Judge Use Cases

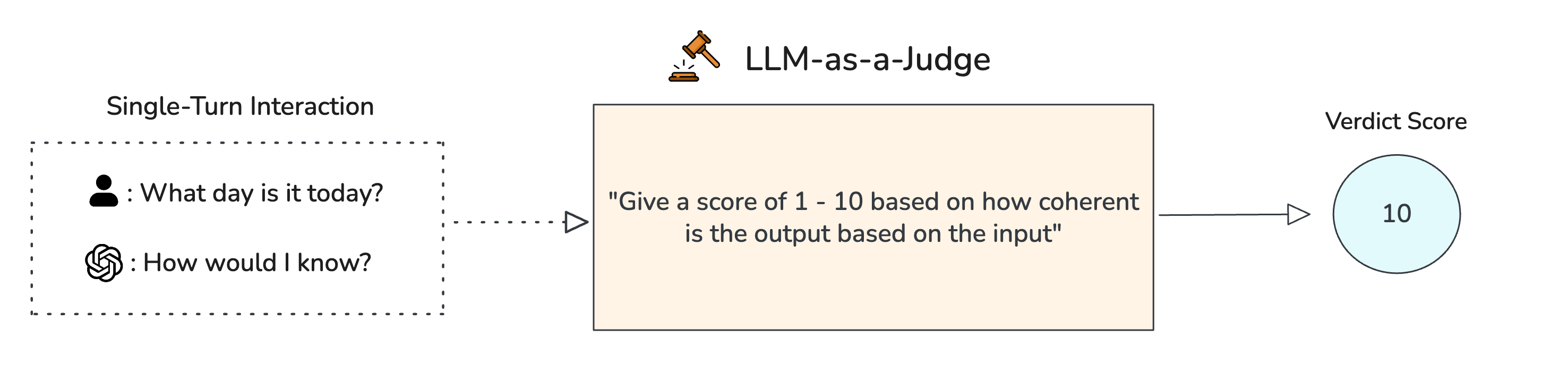

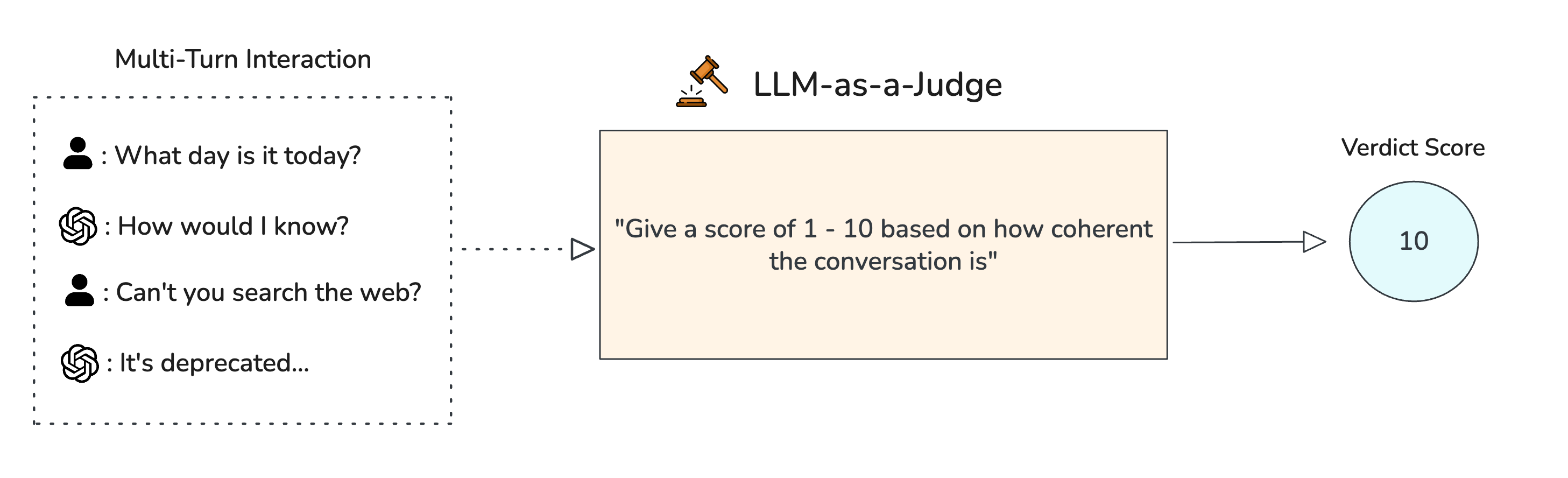

Before we go any further, it is important to understand that LLM-as-a-Judge can be used to evaluate both single and multi-turn use cases.

Single-turn refers to a single, atomic, granular interaction with your LLM app. Multi-turn, on the other hand, is mainly for conversational use cases and contains not one but multiple LLM interactions. For example, RAG QA is a single-turn use case since no conversation history is involved, while a customer support chatbot is a multi-turn one.

LLM judges that score single-turn use cases expect the input and output of the LLM system you're trying to evaluate.

On the other hand, using LLM judges for multi-turn use cases involves feeding the entire conversation into the evaluation prompt.

Multi-turn evaluations are less accurate in general because there is a greater chance of context overload. However, there are various techniques to overcome this problem, which are covered in more depth in guides on LLM chatbot evaluation.
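To make the multi-turn case concrete, here is a minimal sketch of flattening a conversation into one evaluation prompt. The `Turn` type, the role names, and the prompt wording are assumptions for illustration. The `max_turns` window is just one simple way to limit context overload, not necessarily the technique a dedicated chatbot evaluation guide would recommend.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Turn:
    role: str  # assumed to be "user" or "assistant"
    content: str


def format_conversation(turns: List[Turn], max_turns: Optional[int] = None) -> str:
    """Render turns as 'role: content' lines, optionally keeping only the last N."""
    if max_turns is not None:
        if max_turns < 1:
            raise ValueError("max_turns must be at least 1")
        turns = turns[-max_turns:]
    return "\n".join(f"{t.role}: {t.content}" for t in turns)


def build_multi_turn_prompt(
    turns: List[Turn], criteria: str, max_turns: Optional[int] = None
) -> str:
    """Build one evaluation prompt that contains the whole (or windowed) conversation."""
    return (
        "Evaluate the assistant's replies in the conversation below.\n"
        f"Criteria: {criteria}\n\n"
        f"Conversation:\n{format_conversation(turns, max_turns)}\n\n"
        "Score (0 to 1):"
    )
```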

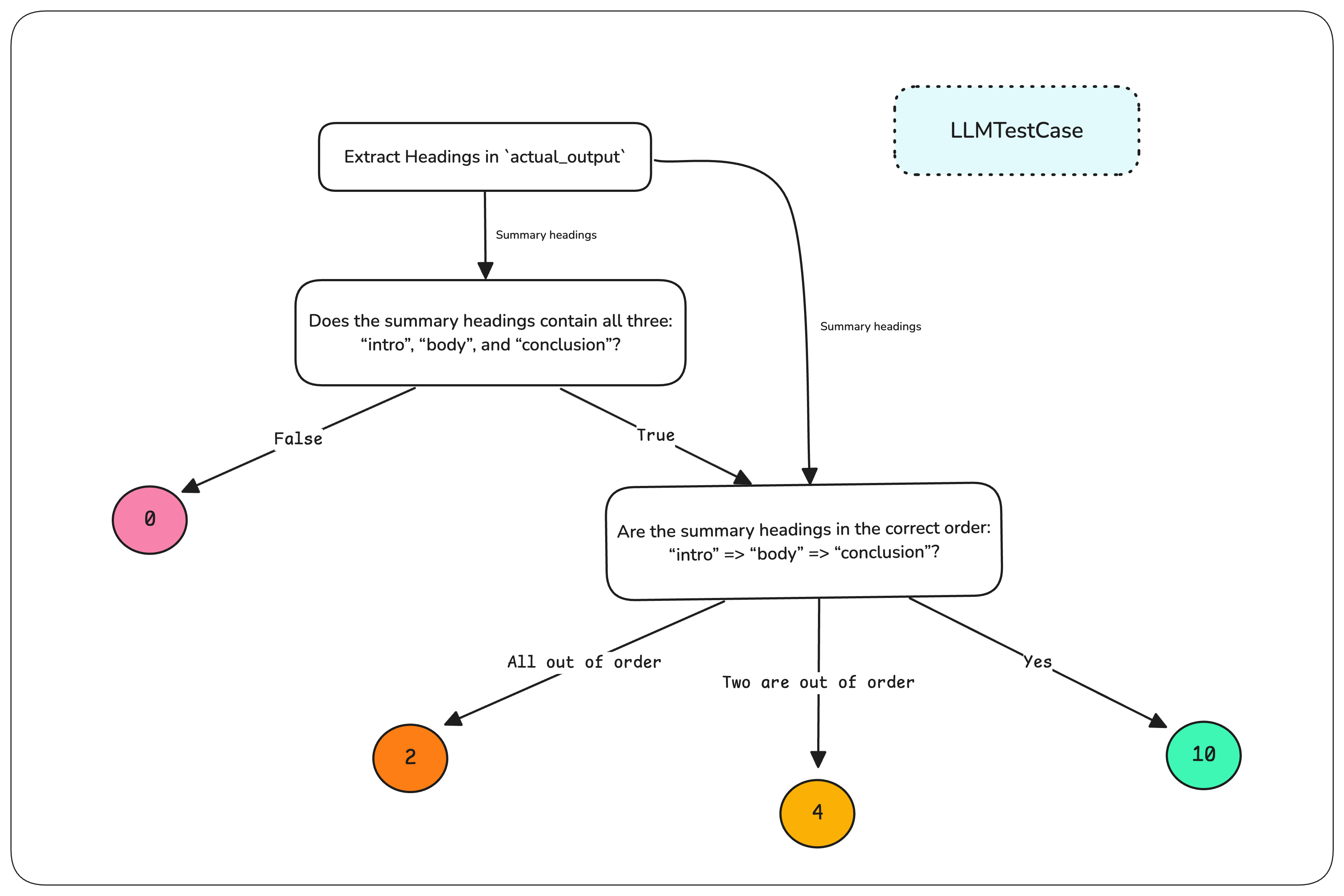

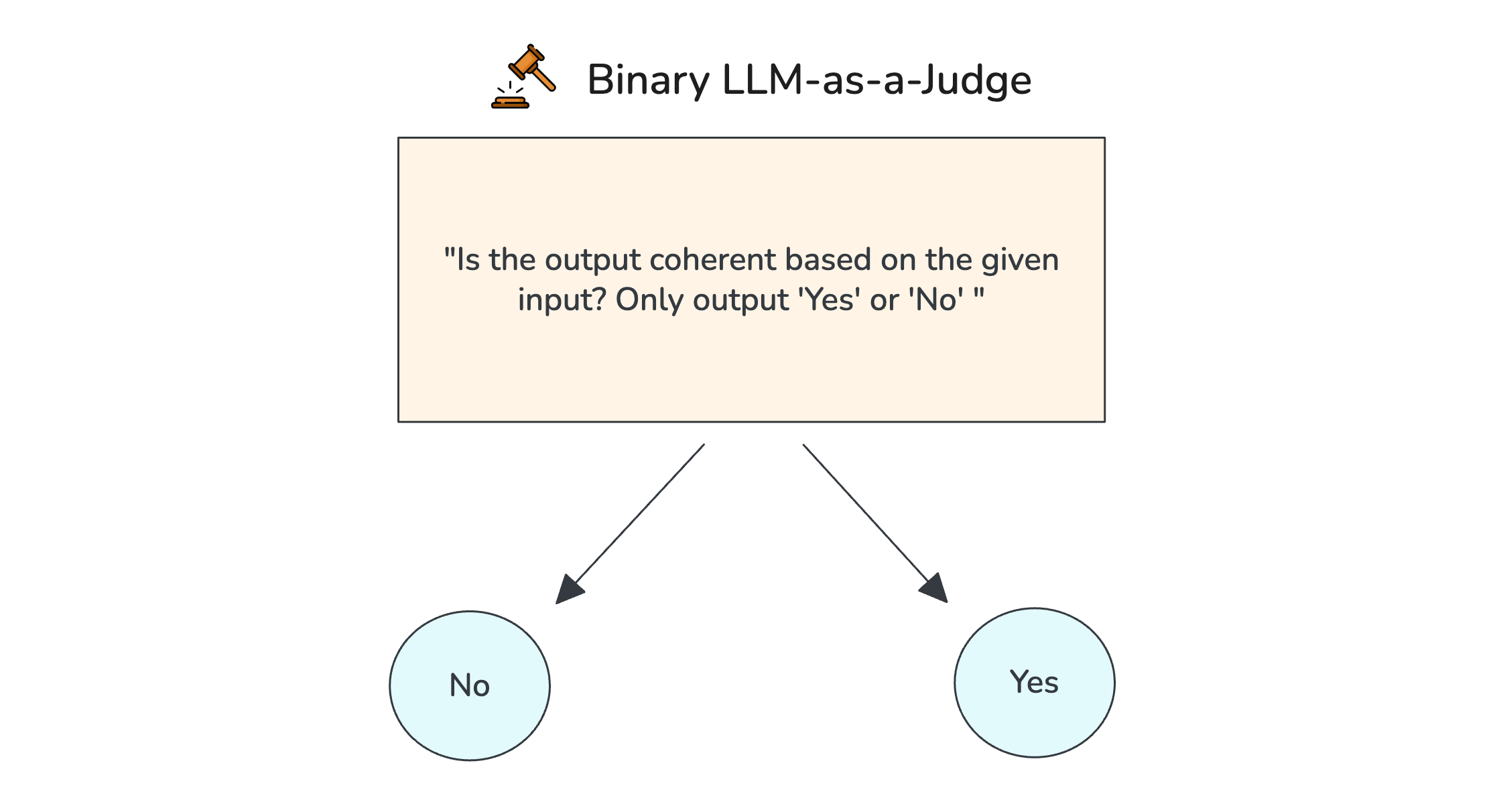

One important thing to note is that LLM judge verdicts don't have to be a score from 1 to 5 or 1 to 10. The range is flexible, and a verdict can even be binary, amounting to a simple "yes" or "no".
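Because ranges vary between judges, it can help to map every verdict onto a common 0 to 1 scale before aggregating results. The sketch below shows one way to do that. The function names are hypothetical, and the linear rescaling is an assumption rather than something prescribed here.

```python
def normalize_score(score: float, low: float, high: float) -> float:
    """Linearly rescale a score from the range [low, high] to [0, 1]."""
    if high <= low:
        raise ValueError("high must be greater than low")
    if not low <= score <= high:
        raise ValueError(f"score {score} is outside [{low}, {high}]")
    return (score - low) / (high - low)


def binary_verdict_to_score(verdict: str) -> float:
    """Map a yes/no verdict to 1.0 or 0.0, ignoring case and surrounding spaces."""
    cleaned = verdict.strip().lower()
    if cleaned == "yes":
        return 1.0
    if cleaned == "no":
        return 0.0
    raise ValueError(f"Expected 'yes' or 'no', got {verdict!r}")
```

With this in place, a 3 on a 1 to 5 scale and a 5.5 on a 1 to 10 scale both land on 0.5, which makes scores from differently configured judges comparable.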

Types of LLM-as-a-Judge

The "Judging LLM-as-a-Judge with MT-Bench and Chatbot Arena" paper introduced LLM judges as an alternative to human evaluation, which is often expensive and time-consuming. It describes three types of LLM judges:

Single Output Scoring (without reference): Judge LLM scores one output using a rubric, based only on the input and optional "retrieval context" (and tools that were called).

Single Output Scoring (with reference): Same as above, but with a gold-standard "expected output" to improve consistency.

Pairwise Comparison: Judge LLM sees two outputs for the same input and picks the better one based on set criteria. They let you easily compare models, prompts, and other configs in your LLM app.

⚠️ Importantly, these three judging approaches are not limited to evaluating single-turn prompts; they can also be applied to multi-turn interactions, especially for a chatbot evaluation use case.

Single-output (referenceless)

This approach uses a reference-less metric, meaning the judge LLM evaluates a single output without being shown an ideal answer. Instead, it scores the output against a predefined rubric that might include factors like accuracy, coherence, completeness, and tone.

Typical inputs include:

The original input to the LLM system

Optional supporting context, such as retrieved documents in a RAG pipeline

Optional tools called, nowadays especially for evaluating LLM agents

Example use cases:

Evaluating the helpfulness of customer support chatbot replies.

Scoring fluency and readability of creative writing generated by an LLM.

Because no gold-standard answer is provided, the judge must interpret the criteria independently. This is useful for open-ended or creative tasks where there isn’t a single correct answer.
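As a rough sketch, a referenceless evaluation prompt can be assembled from the rubric, the input, the output, and whichever optional pieces are available. All names and the prompt wording below are hypothetical, and the 1 to 5 scale is an assumption.

```python
from typing import List, Optional


def build_referenceless_prompt(
    criteria: str,
    input_text: str,
    actual_output: str,
    retrieval_context: Optional[List[str]] = None,
    tools_called: Optional[List[str]] = None,
) -> str:
    """Assemble a judge prompt that contains no expected (gold-standard) answer."""
    sections = [
        f"Evaluation criteria:\n{criteria}",
        f"Input:\n{input_text}",
        f"Output to score:\n{actual_output}",
    ]
    # Optional sections are included only when the caller supplies them.
    if retrieval_context:
        docs = "\n".join(f"- {doc}" for doc in retrieval_context)
        sections.append(f"Retrieval context:\n{docs}")
    if tools_called:
        sections.append("Tools called:\n" + ", ".join(tools_called))
    sections.append("Return a score from 1 to 5 and a one-sentence reason.")
    return "\n\n".join(sections)
```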

Single-output (reference-based)

This method uses a reference-based metric, where the judge LLM is given an expected output in addition to the generated output. The reference acts as an anchor, helping the judge calibrate its evaluation and return more consistent, reproducible scores. Typical inputs include:

The original input to the LLM system.

The generated output to be scored.

A reference output, representing the expected or optimal answer.

Example use cases revolve around correctness:

Assessing the correctness of LLM-generated math solutions against labelled solutions.

Assessing code correctness against a known correct implementation.

The presence of a reference makes this approach well-suited for tasks with clear “correct” answers. It also helps reduce judgment variability, especially for nuanced criteria like factual correctness.
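A reference-based judge can be sketched by adding the expected output to the prompt. Asking the judge for JSON is an assumption made here so that the score and its reason are easy to parse. The function names are hypothetical.

```python
import json
from typing import Tuple


def build_reference_prompt(
    input_text: str, actual_output: str, expected_output: str
) -> str:
    """Build a correctness prompt that anchors the judge on an expected output."""
    return (
        "Compare the actual output with the expected output and judge correctness.\n\n"
        f"Input:\n{input_text}\n\n"
        f"Actual output:\n{actual_output}\n\n"
        f"Expected output:\n{expected_output}\n\n"
        # Plain (non f-string) literal, so the braces below are kept as-is.
        'Reply with JSON only: {"score": <number between 0 and 1>, "reason": "<one sentence>"}'
    )


def parse_verdict(reply: str) -> Tuple[float, str]:
    """Parse the judge's JSON reply into (score, reason) and validate the score."""
    data = json.loads(reply)
    score = float(data["score"])
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"Score {score} is outside the range 0-1")
    return score, str(data.get("reason", ""))
```

Capturing the reason alongside the score is what gives an LLM judge some explainability, since you can read why a particular answer was marked wrong.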

Pairwise comparison

Pairwise evaluation mirrors the approach used in Chatbot Arena, but here the judge is an LLM instead of a human. The judge is given two candidate outputs for the same input and asked to choose which one better meets the evaluation criteria.


Typical inputs include:

The input to the LLM systems

Two candidate outputs, labeled in random order to prevent bias.

Evaluation criteria defining “better” (e.g., accuracy, helpfulness, tone).

This method avoids the complexity of absolute scoring, instead relying on relative quality judgments. It is particularly effective for A/B testing models, prompts, or fine-tuning approaches — and it works well even when scoring rubrics are subjective.
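The random labelling mentioned above can be sketched as follows. The candidates are shown to the judge as "A" and "B" in shuffled order, and the reply is mapped back to the original candidate. `call_judge` is a hypothetical stand-in for an LLM client, and the prompt wording is an assumption.

```python
import random
from typing import Callable, Optional


def build_pairwise_prompt(input_text: str, first: str, second: str, criteria: str) -> str:
    """Present two candidate outputs under the neutral labels A and B."""
    return (
        f"Criteria: {criteria}\n\n"
        f"Input:\n{input_text}\n\n"
        f"Output A:\n{first}\n\n"
        f"Output B:\n{second}\n\n"
        "Which output is better? Reply with exactly 'A' or 'B'."
    )


def pairwise_judge(
    input_text: str,
    output_1: str,
    output_2: str,
    criteria: str,
    call_judge: Callable[[str], str],
    rng: Optional[random.Random] = None,
) -> int:
    """Return 1 or 2 for the winning candidate, with label order randomized."""
    if rng is None:
        rng = random.Random()
    swapped = rng.random() < 0.5
    first, second = (output_2, output_1) if swapped else (output_1, output_2)

    reply = call_judge(build_pairwise_prompt(input_text, first, second, criteria))
    label = reply.strip().upper()
    if label not in ("A", "B"):
        raise ValueError(f"Expected 'A' or 'B', got {reply!r}")

    # Map the positional label back to the original candidate number.
    picked_first = label == "A"
    if picked_first:
        return 2 if swapped else 1
    return 1 if swapped else 2
```

Randomizing the order does not remove a judge's preference for a given position, but it stops that preference from consistently favouring the same candidate across a dataset.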

Example use cases:

Determining whether GPT-4 or Claude 3 produces better RAG summaries for the same query.

Choosing between two candidate marketing email drafts generated from the same brief.

If you stick around until the end, I'll also show you what an LLM-Arena-as-a-Judge looks like.

Effectiveness of LLM-as-a-Judge

LLM-as-a-Judge often aligns with human judgments more closely than humans agree with each other. At first, it may seem counterintuitive - why use an LLM to evaluate text from another LLM?

The key is separation of tasks. We use a different prompt - or even a different model - dedicated to evaluation. This activates distinct capabilities and often reduces the task to a simpler classification problem: judging quality, coherence, or correctness. Detecting issues is usually easier than avoiding them in the first place.

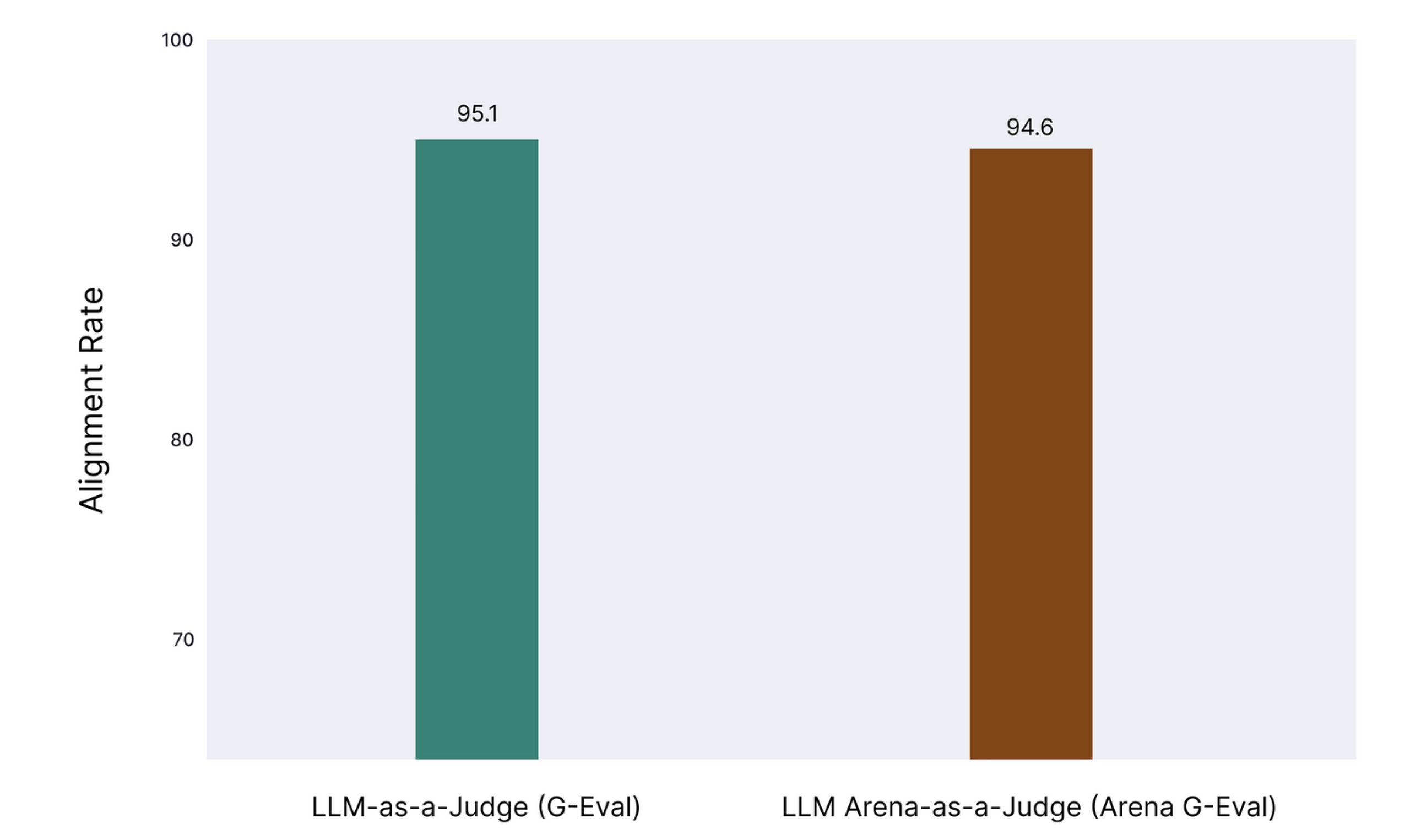

Our testing at Confident AI shows LLM-as-a-Judge, whether single-output or pairwise, achieves surprisingly high alignment with human reviewers.

Our team aggregated annotated test cases from customers (in the form of thumbs up or thumbs down) over the past month, split the data in half, and calculated the agreement between humans and each of regular and arena-based LLM-as-a-judge separately (both implemented via G-Eval, more on this later).
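The exact agreement calculation is not spelled out here, so the following is only one plausible sketch. It computes raw agreement (the share of test cases where two sets of binary labels match) plus Cohen's kappa, which corrects for agreement expected by chance. The function names are hypothetical, and any labels you pass in for experimentation are your own data, not Confident AI's results.

```python
from typing import Sequence


def agreement_rate(a: Sequence[bool], b: Sequence[bool]) -> float:
    """Fraction of test cases where two raters gave the same thumbs up/down label."""
    if len(a) != len(b) or len(a) == 0:
        raise ValueError("Label lists must be non-empty and of equal length")
    return sum(x == y for x, y in zip(a, b)) / len(a)


def cohens_kappa(a: Sequence[bool], b: Sequence[bool]) -> float:
    """Agreement corrected for chance, for two raters with binary labels."""
    observed = agreement_rate(a, b)
    p_a = sum(a) / len(a)  # share of thumbs up from rater a
    p_b = sum(b) / len(b)  # share of thumbs up from rater b
    expected = p_a * p_b + (1 - p_a) * (1 - p_b)
    if expected == 1.0:
        # Both raters used one identical label everywhere: perfect agreement.
        return 1.0
    return (observed - expected) / (1 - expected)
```

For instance, human labels `[True, True, False, False]` and judge labels `[True, False, False, False]` agree on three of four cases, giving a raw agreement of 0.75 and a kappa of 0.5.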

What are the alternatives?

While this section arguably shouldn’t exist, two commonly preferred - but often flawed - alternatives to LLM-based evaluation are worth addressing.

Human Evaluation

- While often considered the “gold standard” for its ability to capture context and nuance, it’s slow, costly, inconsistent, and impractical at scale (e.g., ~52 full days per month to review 100k responses).

Traditional NLP Metrics (e.g., BERT, ROUGE)

- Fast and cheap, but they require a reference text and often miss semantic nuances in open-ended, complex outputs.

Both human and traditional NLP evaluation methods also lack explainability, which is the ability to explain the score they have given. With that in mind, let’s go through the effectiveness of LLM judges and their pros and cons in LLM evaluation.
